Backend For Frontend (BFF) Pattern

In a microservices architecture, each microservice exposes a set of (typically) fine-grained endpoints. This fact can impact the client-to-microservice communication.

A direct client-to-microservice communication architecture could be good enough for a small microservice-based application, especially if the client app is a server-side web applicatio. However, when you build large and complex microservice-based applications (for example, when handling dozens of microservice types), and especially when the client apps are remote mobile apps or SPA web applications, that approach faces a few issues.

Consider the following questions when developing a large application based on microservices:

- How can client apps minimize the number of requests to the back end and reduce chatty communication to multiple microservices?

Interacting with multiple microservices to build a single UI screen increases the number of round trips across the Internet. This approach increases latency and complexity on the UI side. Ideally, responses should be efficiently aggregated in the server side. This approach reduces latency, since multiple pieces of data come back in parallel and some UI can show data as soon as it’s ready.

- How can you handle cross-cutting concerns such as authorization, data transformations, and dynamic request dispatching?

Implementing security and cross-cutting concerns like security and authorization on every microservice can require significant development effort. A possible approach is to have those services within the Docker host or internal cluster to restrict direct access to them from the outside, and to implement those cross-cutting concerns in a centralized place, like an API Gateway.

- How can client apps communicate with services that use non-Internet-friendly protocols?

Protocols used on the server side (like AMQP or binary protocols) are not supported in client apps. Therefore, requests must be performed through protocols like HTTP/HTTPS and translated to the other protocols afterwards. A man-in-the-middle approach can help in this situation.

- How can you shape a facade especially made for mobile apps?

The API of multiple microservices might not be well designed for the needs of different client applications. For instance, the needs of a mobile app might be different than the needs of a web app. For mobile apps, you might need to optimize even further so that data responses can be more efficient. You might do this functionality by aggregating data from multiple microservices and returning a single set of data, and sometimes eliminating any data in the response that isn’t needed by the mobile app. And, of course, you might compress that data. Again, a facade or API in between the mobile app and the microservices can be convenient for this scenario.

Why consider API Gateways instead of direct client-to-microservice communication

In a microservices architecture, the client apps usually need to consume functionality from more than one microservice. If that consumption is performed directly, the client needs to handle multiple calls to microservice endpoints. What happens when the application evolves and new microservices are introduced or existing microservices are updated? If your application has many microservices, handling so many endpoints from the client apps can be a nightmare. Since the client app would be coupled to those internal endpoints, evolving the microservices in the future can cause high impact for the client apps.

Therefore, having an intermediate level or tier of indirection (Gateway) can be convenient for microservice-based applications. If you don’t have API Gateways, the client apps must send requests directly to the microservices and that raises problems, such as the following issues:

- Coupling: Without the API Gateway pattern, the client apps are coupled to the internal microservices. The client apps need to know how the multiple areas of the application are decomposed in microservices. When evolving and refactoring the internal microservices, those actions impact maintenance because they cause breaking changes to the client apps due to the direct reference to the internal microservices from the client apps. Client apps need to be updated frequently, making the solution harder to evolve.

- Too many round trips: A single page/screen in the client app might require several calls to multiple services. That approach can result in multiple network round trips between the client and the server, adding significant latency. Aggregation handled in an intermediate level could improve the performance and user experience for the client app.

- Security issues: Without a gateway, all the microservices must be exposed to the “external world”, making the attack surface larger than if you hide internal microservices that aren’t directly used by the client apps. The smaller the attack surface is, the more secure your application can be.

- Cross-cutting concerns: Each publicly published microservice must handle concerns such as authorization and SSL. In many situations, those concerns could be handled in a single tier so the internal microservices are simplified.

What is the API Gateway pattern?

When you design and build large or complex microservice-based applications with multiple client apps, a good approach to consider can be an API Gateway. This pattern is a service that provides a single-entry point for certain groups of microservices. It’s similar to the Facade Pattern from object-oriented design, but in this case, it’s part of a distributed system. The API Gateway pattern is also sometimes known as the “backend for frontend” (BFF) because you build it while thinking about the needs of the client app.

Therefore, the API gateway sits between the client apps and the microservices. It acts as a reverse proxy, routing requests from clients to services. It can also provide other cross-cutting features such as authentication, SSL termination, and cache.

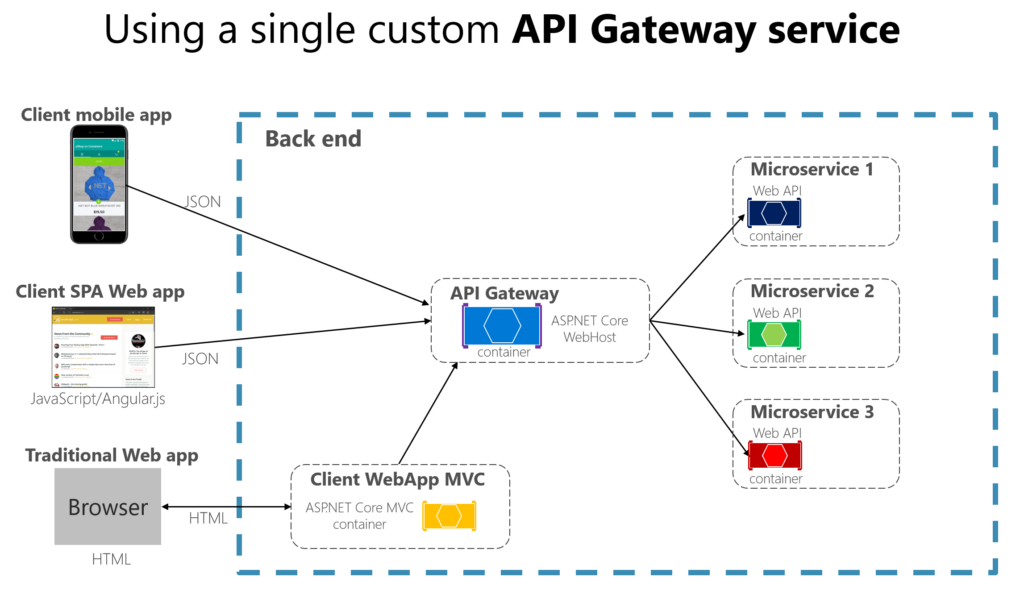

Apps connect to a single endpoint, the API Gateway, that’s configured to forward requests to individual microservices. In this example, the API Gateway would be implemented as a custom ASP.NET Core WebHost service running as a container.

It’s important to highlight that in that diagram, you would be using a single custom API Gateway service facing multiple and different client apps. That fact can be an important risk because your API Gateway service will be growing and evolving based on many different requirements from the client apps. Eventually, it will be bloated because of those different needs and effectively it could be similar to a monolithic application or monolithic service. That’s why it’s very much recommended to split the API Gateway in multiple services or multiple smaller API Gateways, one per client app form-factor type, for instance.

You need to be careful when implementing the API Gateway pattern. Usually it isn’t a good idea to have a single API Gateway aggregating all the internal microservices of your application. If it does, it acts as a monolithic aggregator or orchestrator and violates microservice autonomy by coupling all the microservices.

Therefore, the API Gateways should be segregated based on business boundaries and the client apps and not act as a single aggregator for all the internal microservices.

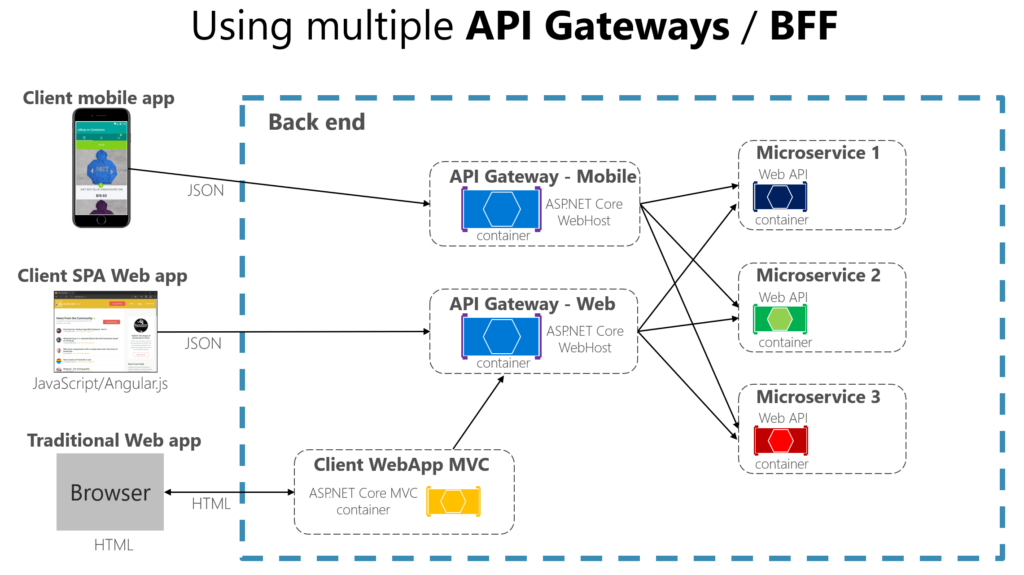

When splitting the API Gateway tier into multiple API Gateways, if your application has multiple client apps, that can be a primary pivot when identifying the multiple API Gateways types, so that you can have a different facade for the needs of each client app. This case is a pattern named “Backend for Frontend” (BFF) where each API Gateway can provide a different API tailored for each client app type, possibly even based on the client form factor by implementing specific adapter code which underneath calls multiple internal microservices, as shown in the following image:

Above diagram shows API Gateways that are segregated by client type; one for mobile clients and one for web clients. A traditional web app connects to an MVC microservice that uses the web API Gateway. The example depicts a simplified architecture with multiple fine-grained API Gateways. In this case, the boundaries identified for each API Gateway are based purely on the “Backend for Frontend” (BFF) pattern, hence based just on the API needed per client app. But in larger applications you should also go further and create other API Gateways based on business boundaries as a second design pivot.

Main features in the API Gateway pattern

An API Gateway can offer multiple features. Depending on the product it might offer richer or simpler features, however, the most important and foundational features for any API Gateway are the following design patterns:

Reverse proxy or gateway routing. The API Gateway offers a reverse proxy to redirect or route requests (layer 7 routing, usually HTTP requests) to the endpoints of the internal microservices. The gateway provides a single endpoint or URL for the client apps and then internally maps the requests to a group of internal microservices. This routing feature helps to decouple the client apps from the microservices but it’s also convenient when modernizing a monolithic API by sitting the API Gateway in between the monolithic API and the client apps, then you can add new APIs as new microservices while still using the legacy monolithic API until it’s split into many microservices in the future. Because of the API Gateway, the client apps won’t notice if the APIs being used are implemented as internal microservices or a monolithic API and more importantly, when evolving and refactoring the monolithic API into microservices, thanks to the API Gateway routing, client apps won’t be impacted with any URI change.

Requests aggregation. As part of the gateway pattern you can aggregate multiple client requests (usually HTTP requests) targeting multiple internal microservices into a single client request. This pattern is especially convenient when a client page/screen needs information from several microservices. With this approach, the client app sends a single request to the API Gateway that dispatches several requests to the internal microservices and then aggregates the results and sends everything back to the client app. The main benefit and goal of this design pattern is to reduce chattiness between the client apps and the backend API, which is especially important for remote apps out of the datacenter where the microservices live, like mobile apps or requests coming from SPA apps that come from JavaScript in client remote browsers. For regular web apps performing the requests in the server environment (like an ASP.NET Core MVC web app), this pattern is not so important as the latency is very much smaller than for remote client apps.

Depending on the API Gateway product you use, it might be able to perform this aggregation. However, in many cases it’s more flexible to create aggregation microservices under the scope of the API Gateway, so you define the aggregation in code.

Cross-cutting concerns or gateway offloading. Depending on the features offered by each API Gateway product, you can offload functionality from individual microservices to the gateway, which simplifies the implementation of each microservice by consolidating cross-cutting concerns into one tier. This approach is especially convenient for specialized features that can be complex to implement properly in every internal microservice, such as the following functionality:

- Authentication and authorization

- Service discovery integration

- Response caching

- Retry policies, circuit breaker, and QoS

- Rate limiting and throttling

- Load balancing

- Logging, tracing, correlation

- Headers, query strings, and claims transformation

- IP allowlisting

Benefits of BFF

The BFF pattern comes with many potential benefits.

- May reduce the chattiness of the client with an implementation by serving as an aggregator and coordinator of requests

- Smaller and less computationally complex than an all-encompassing monolithic API

- Faster time to market as front end teams can have dedicated back end teams serving their unique needs, vs. a combined monolithic team servicing the needs of competing constituent front end teams

- May offer better results for each front end constituent, vs “in between” solutions that are optimized for neither constituent

Drawbacks of the BFF PATTERN

There are two very obvious drawbacks of the BFF pattern implementation, dealing with fault isolation and the propagation of blast radius for any failure. A handful of additional drawbacks need to be remunerated if BFFs are employed.

- Fan Out: If engineers and architects aren’t careful, there can be a high degree of fan-out between any BFF and associated services it calls. The failure of any of those services can bring down the entire BFF for the interface in question

- Fuse: Each service, if it responds to multiple BFFs has the capability of bringing down all BFFs and as a result halt all operations. Each individual service then becomes a fuse anti-pattern

- Duplication and Lower Reuse: There is a high probability that each BFF may implement similar capabilities with different teams, easily doubling (or more) the cost of development. The benefits of faster time to market may warrant this downside, but if it is a major concern some lightweight overhead associated with identifying duplicate efforts may help identify opportunities for shared libraries that get developed once

- More Services and Components: As we segment backends for each constituent frontend, the number of deployable units increases. This becomes less of a concern if teams have good DevOps practices, great monitoring, lots of automation and good ownership around quality of releases

How to solve BFF Associated Problems

- Solve Fan Out: Because we don’t want any single subsequent service that any BFF coordinates to take down the BFF entirely, we should implement fault isolation. Each downstream service ideally will have its own BFF termination point for each modality. While this increases the number of deployments again, we get significantly better fault isolation and higher availability. If coordination is necessary between downstream components, rethink the reasons for splitting each subsequent components.

- Remediate Fuses: This is virtually impossible to solve without dedicating a service to each modality/interface BFF. Dedication of deployed services will work if databases aren’t involved, but will not work if each subsequent service needs to share a database as the database now becomes a fuse. So, if a service need not use a database consider separate deployments for maximum availability. If databases are required, accept the fuse as technical debt that is partially remediated by eliminating fan-out.

- Reuse: This may or not be a problem with your implementation. But if you suspect that functionality will overlap significantly between modalities, it may make sense to ensure teams (perhaps scrum masters and product owners) are identifying “large” work efforts that must be shared. Having teams implement these larger needs in reusable libraries will lower development costs and decrease time to market for other capabilities.

- Service Multiplication: As mentioned above, ensuring that teams “own” their services through the service life and enabling easy release and interaction through automation solves nearly all the concerns of a larger number of deployable services.